In the realm of serverless computing, the term “cold start” refers to the initial delay a serverless function experiences when it is invoked after a period of inactivity. For developers and businesses relying on rapid response times, understanding cold start behavior is crucial for delivering performant applications.

What is a Cold Start?

A cold start occurs when a serverless platform, like AWS Lambda, Azure Functions, or Google Cloud Functions, needs to initialize a new container or runtime environment to execute a function. This usually happens when:

- The function hasn’t been invoked recently.

- The provider has scaled idle instances down to zero to save resources.

- The number of incoming requests exceeds the current number of warm instances.
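
To make these triggers concrete, here is a toy TypeScript model of an instance pool. It is a hypothetical sketch for intuition only, not any provider's actual scheduler. `InstancePool` and its idle timeout are invented for illustration, and initialization time is ignored.

```typescript
// Toy model of a serverless instance pool, for intuition only.
// Real providers use their own scheduling and reclaim policies.
type StartKind = "cold" | "warm";

interface Instance {
  freeAt: number; // time (ms) at which this instance finishes its current request
}

class InstancePool {
  private instances: Instance[] = [];
  private readonly idleTimeoutMs: number;

  constructor(idleTimeoutMs: number) {
    // Hypothetical parameter: how long an idle instance survives before it is reclaimed.
    this.idleTimeoutMs = idleTimeoutMs;
  }

  /** Route a request arriving at `now` (ms) that runs for `durationMs`. */
  invoke(now: number, durationMs: number): StartKind {
    // Reclaim instances idle longer than the timeout (eventually scaling to zero).
    // Busy instances have freeAt > now, so the difference is negative and they are kept.
    this.instances = this.instances.filter((i) => now - i.freeAt <= this.idleTimeoutMs);

    // Reuse an instance that is not currently busy: a warm start.
    const idle = this.instances.find((i) => i.freeAt <= now);
    if (idle !== undefined) {
      idle.freeAt = now + durationMs;
      return "warm";
    }

    // No idle instance available: provision a new one. This is a cold start.
    this.instances.push({ freeAt: now + durationMs });
    return "cold";
  }

  get size(): number {
    return this.instances.length;
  }
}
```

With a 60-second idle timeout, the first request to an empty pool is cold, a request arriving after that one finishes is warm, a request that overlaps a busy instance is cold, and a request arriving after more than a minute of inactivity is cold again because the pool has been reclaimed.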

Cold Start vs. Warm Start

- Cold Start: Involves setting up the runtime, downloading code and dependencies, and initializing application state. This can add latency ranging from 100ms to several seconds.

- Warm Start: An already-initialized instance is reused, so only the handler code runs and the request skips the setup overhead entirely.

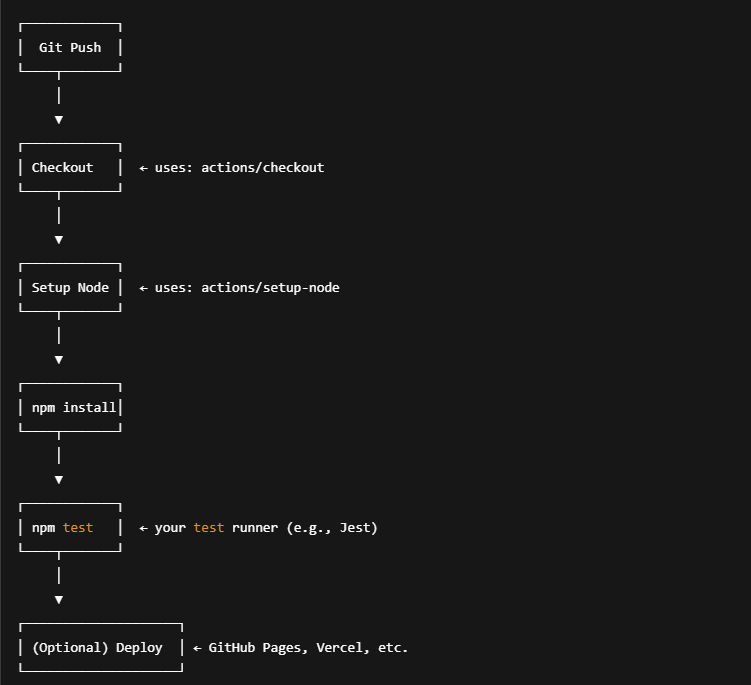

Real-World Example: AWS Lambda

Consider a Node.js-based AWS Lambda function triggered by an HTTP request via API Gateway. If it hasn’t been used in a while, AWS must:

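- Allocate a new execution environment (a lightweight, container-like sandbox).

- Download your function code and any layers.

- Start the Node.js runtime.

- Run your initialization code (everything outside the handler function).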

This process typically takes 500ms to 2 seconds, depending on package size and the factors described below.
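
The last step is why code placement matters in a Node.js function: anything at module scope runs once per execution environment during a cold start, while the handler body runs on every invocation. A minimal TypeScript sketch that also reports whether each invocation was a cold start, assuming it is compiled to JavaScript before deployment. The event and result types are simplified stand-ins for the API Gateway shapes, and `config` and `respond` are hypothetical names.

```typescript
// Simplified stand-ins for the API Gateway proxy event/result shapes.
interface ApiEvent { path?: string }
interface ApiResult { statusCode: number; headers: Record<string, string>; body: string }

// ---- Module scope: runs once per execution environment (the cold start) ----
const initializedAt = Date.now();
let isColdStart = true;
// Hypothetical stand-in for expensive setup such as SDK clients or config parsing.
const config = { greeting: "hello" };

// ---- Per-request work: runs on every invocation, cold or warm ----
function respond(event: ApiEvent): ApiResult {
  const coldStart = isColdStart;
  isColdStart = false; // later invocations in this environment are warm
  return {
    statusCode: 200,
    headers: { "content-type": "application/json" },
    body: JSON.stringify({ message: config.greeting, path: event.path ?? "/", coldStart, initializedAt }),
  };
}

// Entry point invoked by Lambda; the function's handler setting must point at this export.
export const handler = async (event: ApiEvent): Promise<ApiResult> => respond(event);
```

Warm invocations skip the module-scope work entirely, which is why expensive clients and connections are commonly created there rather than inside the handler.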

Factors Affecting Cold Starts

- Language Choice: Runtimes with heavier startup, such as Java on the JVM, often have longer cold start times than Node.js or Python; compiled Go binaries, by contrast, usually start quickly.

- Package Size: Larger packages take longer to load.

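- VPC Configuration: Attaching a function to a VPC historically added significant time while an ENI (Elastic Network Interface) was provisioned; since AWS's 2019 Lambda networking changes, ENIs are created when the function is configured, so this penalty is now much smaller.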

- Provisioning Strategy: Relying solely on on-demand scaling instead of using pre-warmed instances increases cold starts.
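
Package size matters because code imported at module scope is loaded during the cold start, whether or not a given request needs it. One common way to trim that work, not covered in the list above, is to defer a heavy dependency until a request actually uses it. A minimal sketch with hypothetical names: `lazy`, `getReportRenderer`, and `route` are illustrative, and the "heavy" factory stands in for a real `require()` of a large library.

```typescript
// Generic memoizing wrapper: the factory runs on first use, not at module load.
function lazy<T>(factory: () => T): () => T {
  let value: T | undefined;
  let created = false;
  return () => {
    if (!created) {
      value = factory();
      created = true;
    }
    return value as T;
  };
}

let heavyLoads = 0; // counts how often the expensive setup actually ran

// Hypothetical expensive dependency; a real function might require() a large library here.
const getReportRenderer = lazy(() => {
  heavyLoads += 1;
  return (rows: string[]): string => rows.join("\n");
});

function route(path: string): string {
  // Cheap path: never touches the heavy dependency, so it adds nothing to startup.
  if (path === "/health") return "ok";
  // Only this path pays the loading cost, and only on its first call per environment.
  return getReportRenderer()(["report", path]);
}
```

This moves the cost rather than removing it: the first request that needs the dependency pays for loading it, so the technique helps most when many code paths never touch it.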

Mitigation Strategies

- Provisioned Concurrency (AWS): Keeps a configured number of execution environments initialized and ready to respond.

- Azure Functions Premium Plan: Offers always-ready, pre-warmed instances.

- Reduce Dependency Size: Trim unnecessary packages and use optimized libraries.

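- Keep Functions Warm: Periodically invoke functions (e.g., using a scheduler) to prevent them from idling. Each ping usually warms only one instance, so this does not prevent cold starts during traffic bursts.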
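
If you use the scheduler approach, the function should recognize the ping and return before doing any real work. A minimal sketch, assuming the schedule is an Amazon EventBridge rule (whose events carry `source: "aws.events"` and `detail-type: "Scheduled Event"`) and that the function has no other scheduled triggers that need real processing. The event shape is a simplified, hypothetical subset, and `isWarmupPing` is an illustrative name.

```typescript
// Simplified event shape covering both warm-up pings and ordinary requests.
interface IncomingEvent {
  source?: string;
  "detail-type"?: string;
  path?: string;
}

// Treat EventBridge scheduled events as warm-up pings.
// Assumption: this function has no other scheduled triggers.
function isWarmupPing(event: IncomingEvent): boolean {
  return event.source === "aws.events" && event["detail-type"] === "Scheduled Event";
}

export const handler = async (event: IncomingEvent): Promise<{ statusCode: number; body: string }> => {
  if (isWarmupPing(event)) {
    // Return immediately; the ping exists only to keep this environment from idling.
    return { statusCode: 200, body: "warm" };
  }
  // Normal request path.
  return { statusCode: 200, body: `handled ${event.path ?? "/"}` };
};
```

If the function does have legitimate scheduled work, a custom marker in the rule's input payload is a safer way to identify pings than matching on the event source.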

Best Practices for Developers

- Split Monoliths: Break large functions into smaller ones, each with a smaller package and fewer dependencies to load.

- Asynchronous Design: Keep slow or non-critical work off the request path (for example, by handing it to a queue) so users are not left waiting on a cold-starting function.

- Monitor Metrics: Use tools like Amazon CloudWatch (Lambda's REPORT log line includes an Init Duration field on cold starts) or Azure Application Insights to detect cold start patterns.
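
In AWS Lambda, the REPORT line written at the end of each invocation includes an `Init Duration` field only when that invocation paid for a cold start, which makes cold starts straightforward to count. A small parser sketch, assuming the default plain-text log format with tab-separated `Key: value` fields (verify this against your own logs; the JSON log format is structured differently). `parseReportLine` and `InvocationReport` are hypothetical names.

```typescript
interface InvocationReport {
  requestId: string;
  durationMs: number;
  initDurationMs: number | undefined; // present only on cold starts
  coldStart: boolean;
}

// Parses one Lambda REPORT log line; returns null for any other line.
function parseReportLine(line: string): InvocationReport | null {
  if (!line.startsWith("REPORT ")) return null;

  // Split "Key: value unit" fields on tabs into a lookup table.
  const fields = new Map<string, string>();
  for (const part of line.slice("REPORT ".length).split("\t")) {
    const idx = part.indexOf(":");
    if (idx === -1) continue; // skip empty trailing fields
    fields.set(part.slice(0, idx).trim(), part.slice(idx + 1).trim());
  }

  const requestId = fields.get("RequestId");
  const duration = fields.get("Duration");
  if (!requestId || !duration) return null;

  // parseFloat reads the leading number and ignores the " ms" unit.
  const init = fields.get("Init Duration");
  return {
    requestId,
    durationMs: parseFloat(duration),
    initDurationMs: init === undefined ? undefined : parseFloat(init),
    coldStart: init !== undefined,
  };
}
```

Aggregating `coldStart` and `initDurationMs` over a window of logs gives both how often cold starts happen and how much they cost.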

Conclusion

Cold starts are a natural trade-off for the flexibility of serverless computing. With careful planning, developers can minimize their impact and keep applications responsive even under unpredictable workloads.

Whether you’re building a chatbot, an IoT backend, or a web API, factoring cold starts into your architecture decisions will lead to smoother, more responsive user experiences.